In the exciting blend of Artificial Intelligence and creativity, Text-to-Image (txt2img) technology is making waves, completely changing the game when it comes to how we imagine and bring our creative ideas to life.

As I’ve been exploring the world of AI imagery, I’ve seen txt2img technology skyrocket since 2023. It’s been fascinating to witness the buzz it creates among designers and AI enthusiasts. But it’s not all smooth sailing. While txt2img is great at turning ideas into pictures, it sometimes struggles to fully grasp what the creator had in mind, showing there’s room for improvement.

As we dig into how txt2img technology works, its growth, and the hurdles it faces, let’s also talk about image-to-image (img2img) technology. It’s not just another tool; it gives us more direct control when turning our wildest ideas into reality. So, let’s dive into this journey together, recognizing what txt2img does well and looking forward to the cool stuff img2img has in store for us.

1. Txt2img: Bridging Dreams and Reality

Txt2img models combine a language model, which encodes the input text into a latent representation, with a generative image model, which produces an image conditioned on that representation [1]. The effectiveness of these models stems from their training on massive datasets of image and text pairs scraped from the web, enabling them to generate detailed and high-quality images from textual prompts.

Emerging in the mid-2010s, this technology harnesses deep neural networks to turn descriptive language into vivid images. By 2022, leading txt2img models like OpenAI’s DALL-E 2 [2], Stability AI’s Stable Diffusion [3], and Midjourney [4] had begun to approach the quality of real photographs and handcrafted art.

For designers, artists, and anyone who loves to create, txt2img is like a trusty sidekick. It helps turn ideas into visuals quickly, skipping over the usual hurdles of manual drawing or navigating complicated design software. This tool makes creating art more accessible to everyone, sparking a new wave of creativity in the digital world.

2. Txt2img: Encountering Creative Challenges

Txt2img technology, while innovative, encounters its own set of challenges. Its core process, transforming text into images through complex algorithms, sometimes struggles to capture the full spectrum of human creativity. This process, often seen as a “black box,” can lead to unpredictable results that may not fully align with the creator’s vision.

| Aspect | Txt2img Challenges | Img2img Advantages |
| --- | --- | --- |
| The Ambiguity of Text Prompts | Vague and subjective prompts lead to varied and inaccurate visuals. | Using visual inputs, aligning outputs closer to the intended design. |
| Real-World Design Scenario | Struggles to convert complex ideas into clear text, losing detail and accuracy. | Direct image manipulation, maintaining complex details. |
| The Iterative Dilemma | Starting from scratch, making refinements slow and unpredictable. | Easy and fast refinements directly on images. |

2.1 The Ambiguity of Text Prompts

The inherent ambiguity of text prompts poses a significant challenge. The subjective nature of language means that identical prompts can produce very different visuals, depending on individual interpretation. This variability is intensified when AI attempts to visualize these prompts, relying on its training data to infer meaning. A study I recently explored shines a spotlight on this very challenge, underlining how natural language’s vagueness can confuse AI, leading to images that stray far from our intended meaning [5].

For instance, consider a seemingly straightforward prompt like “a party in the forest.” Simple, right? Not exactly. The ambiguity here is multi-layered. Does “party” refer to a group of people celebrating, or perhaps, a gathering of animals in a whimsical sense?

And the “forest” – is it a lush, dense jungle or a serene, springtime woodland? These questions highlight the ambiguity arising from semantics (what words mean) and underspecification (details left out because they’re assumed to be understood).

But it doesn’t stop there. The study points out that syntax (how words are structured) also plays a role in creating ambiguity. A phrase as simple as “a red ball and pen” can leave us guessing: Is the pen also red, or is it just the ball that’s red? AI, in its current state, struggles with these nuances, often defaulting to the most common interpretations seen in its training data, which may not align with the unique or creative intentions behind our prompts.
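The attachment ambiguity in “a red ball and pen” can be made concrete with a small sketch. The function name `adjective_readings` and the dictionary representation are illustrative assumptions, not something taken from the cited study; the snippet simply enumerates the two ways an adjective can attach to a pair of coordinated nouns.

```python
def adjective_readings(adjective, nouns):
    """Enumerate the two readings of '<adjective> <noun1> and <noun2>'.

    Hypothetical helper for illustration: each reading maps a noun to the
    list of adjectives that modify it.
    """
    first, *rest = nouns
    # Narrow scope: the adjective modifies only the first noun.
    narrow = {first: [adjective], **{n: [] for n in rest}}
    # Wide scope: the adjective distributes over every coordinated noun.
    wide = {n: [adjective] for n in nouns}
    return [narrow, wide]


readings = adjective_readings("red", ["ball", "pen"])
for reading in readings:
    print(reading)
```

Nothing in the prompt text itself marks which of the two readings was intended, so the model has to pick one.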

This deep dive into the issue of ambiguity in txt2img prompts underscores a pivotal challenge in bridging human creativity with AI’s interpretative capabilities. It’s a reminder of how our everyday language, rich with implied meanings and shared contexts, poses a complex puzzle for AI to solve.

2.2 The Limitations of Text Prompts in Real-World Design Scenario

Beyond this, the txt2img process faces inherent limitations in real-world design scenarios. The main challenges are accurately converting intricate mental visuals into specific text prompts, the randomness of the generated images, and the struggle to fine-tune them effectively.

This can be illustrated with the two steps of the txt2img process. The first step is turning a vivid idea, intention, or image in your head into a text prompt. When the image you’re trying to create is complex and detailed, you might struggle with how to express it, end up with descriptions that are unclear, incomplete, or too general, and lose important details in the translation. The next step is converting the text prompt into an image. Although the fidelity and accuracy of txt2img have improved a lot and continue to advance, there’s still a risk of losing details or ending up with something that doesn’t match the designer’s original vision.

2.2.1 Using Txt2img to Design a Porsche

Using a specific design scenario might give us a clearer picture. Imagine you’re the lead designer for Porsche, and you’ve got an image in your head of a beautiful, world-famous sports car (like the one shown below). How would you use txt2img to bring this vision to life?

Generating an image of a car might seem like a piece of cake for txt2img. However, for design scenarios that demand high accuracy in structure and detail, such as car concept drawings, using txt2img could spell disaster.

How would you describe the iconic Porsche front? It looks broad from the front but flat and protruding from the side. How about the car’s streamlined body shape, where lines sharply rise or smoothly fall? Or the compact rear with its intricate designs, including the spoiler’s position and shape? Then there’s the task of describing the lights: What shape are the headlights? Where do the linear taillights sit on the rear? What’s the height of the taillights?

Even if you managed to convert every detail in your mind into text (though the prompt might be as long as a paper), and sent this massive document to an AI image generator and hit generate, you then lose control over the visual outcome. As mentioned earlier, it’s like a black box; you don’t know what kind of car images the AI’s model was trained on, how it will translate your detailed prompt, or how it will turn that into a car image. Plus, even as AI’s fidelity to prompts improves, and you specify perspectives and distances, it’s still tough to get a car rendering that satisfies all details, with the exact viewpoint and distance you wanted. Not to mention, design is a process of constant revisions, needing continuous experimentation to achieve the desired image.

The txt2img design process feels more like a designer’s ordeal, having first to convert already vivid mental images into abstract, vague, lengthy, and uncontrollable text prompts, and then hoping that this translation makes sense to the AI. You pray to find an image close to your intention and imagination among the outputs. But let’s face it, no designer would tolerate such torment, nor does any designer complete their design tasks this way.

2.2.2 Using Img2img to Design a Porsche: More Tailored to Designers’ Habits

For designers, sketching out the ideas in their heads is far more efficient, intuitive, and controllable than wrestling with cumbersome and unrewarding prompts. The act of sketching is the real design process, where designers unleash their creativity and imagination. Completely replacing this process with AI might strip away the sense of creativity, randomness, and humanity from the design process. This could be why some designers resist AI, as handing over text prompts to AI for direct image generation can lead to outcomes that are too similar due to similar training data, potentially burying the designer’s original ideas under a pile of soulless duplicates.

Img2img, however, preserves the sketching phase, enabling the rapid, cost-effective completion of style experiments, effects, and rendering into more complete images. Designers maintain a high level of control over the image, using the sketch as the most accurate and comprehensive prompt for effective collaboration with AI.

As the saying goes, “A picture is worth a thousand words.” Let’s see how img2img can be used to complete the Porsche design mentioned earlier. You start with a rough design of the Porsche in your mind, then quickly produce a sketch. During this process, you can precisely control details like the lines and shape of the car, the design of the front and rear, and the form and placement of the lights, with the flexibility to make changes. After that, you pass this sketch to an AI image generator. The AI uses your sketch as the primary reference, and you can add text prompts for color, background, material, etc., to get closer to what you envisioned. With just one click, the AI helps you swiftly turn your idea into a complete design.

Imagine, with the future development and application of brain-computer interfaces, AI img2img combined with these interfaces might even eliminate the need for sketching, directly transforming the images in your mind into concrete, visualized images. This shows that img2img offers faster and more convenient processes, stronger detail control, and better alignment with designers’ habits, holding great potential to integrate into their workflows.

2.3 The Iterative Dilemma of Txt2img Workflow

2.3.1 Navigating Txt2img’s Iterative Hurdles

Furthermore, the iterative nature of design and art creation doesn’t mesh well with txt2img’s limitations. The need for constant adjustments, variations, and refinements—an integral part of the creative process—becomes cumbersome. Each time you seek to refine or alter your design, you’re essentially starting from scratch with a new prompt, hoping the AI will somehow land closer to your vision. It’s a bit like playing a game where the AI throws you a curveball with every iteration—sometimes it adds the detail you wanted, but other times it drops something you loved in the previous version. It’s an exciting yet unpredictable process, serving more as a source of inspiration and rough drafts rather than seamlessly integrating into the workflow of a designer.

2.3.2 Effortless Iteration with Img2img

Imagine the convenience if modifications could be made directly on the image. Enter the local img2img function with Generative Fill, which allows for on-the-spot adjustments by painting over the areas you wish to change, then generating the desired details through text prompts, sketches, or brushstrokes. To be more specific, text-based generative fill is kind of like doing a spot of txt2img, but it all happens on top of your original image’s layout and scene, so it’s img2img as well.
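The idea of regenerating only the painted-over area can be sketched as a mask-based composite. This is a toy illustration under stated assumptions: images are small 2D lists of grayscale values, `generated` stands in for whatever a real model would produce for the prompt, and `composite_fill` is a hypothetical name rather than a function from any particular tool.

```python
def composite_fill(original, generated, mask):
    """Keep `original` where the mask is 0 and take `generated` where it is 1."""
    return [
        [g if m else o for o, g, m in zip(orig_row, gen_row, mask_row)]
        for orig_row, gen_row, mask_row in zip(original, generated, mask)
    ]


original = [[10, 10, 10],
            [10, 10, 10]]
generated = [[99, 99, 99],   # stand-in for model output
             [99, 99, 99]]
mask = [[0, 1, 0],           # 1 marks the painted-over pixels
        [0, 1, 1]]

result = composite_fill(original, generated, mask)
print(result)
```

Pixels outside the mask pass through untouched, which is why the rest of the layout and scene survives a local edit. Real tools also blend the seam and condition the model on the surrounding pixels, which this sketch leaves out.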

Take, for example, this perfume design. After smoothly turning a sketch into a refined product image, we noticed some details needed tweaking. For instance, we realized frangipani flowers don’t have visible stamens, so we used the removal tool directly on the generated image to get rid of them. Additionally, the perfume bottle cap seemed too bulky, and we preferred a transparent texture, so we used the generative fill tool with the prompt “glassy perfume bottle cap.” The result is a polished product image, which shows how img2img can streamline industrial design.

2.3.3 Evolving Beyond Text: The Future of Img2img Editing

In the current img2img setup, the generative fill tool relies on entering text prompts, acting as a sort of localized txt2img. However, looking ahead, we’ll have access to even more flexible and precise generative fill tools. These future versions will allow us to make modifications with sketches or brushstrokes right where we need them.

For sketch-based Generative Fill, your sketches guide the AI, using img2img tech to integrate these drawings into the original image’s style seamlessly. And with brushstroke-based Generative Fill, you’re basically painting shapes on your canvas, and Generative Fill whips up matching elements.

These approaches will be covered in more detail later. Sketch and brushstroke methods are still being refined but are set to simplify and streamline the local img2img editing process in the near future. This technique ensures the original image’s structure and style remain intact while giving designers the freedom to adjust details directly, making it easier to bring creative ideas to life without overcomplicating the process.

3. Img2img: The New King in AI-Driven Graphic Design

3.1 Img2img Introduction: Beyond Textual Boundaries

In the previous section, we navigated the unique capabilities and limitations of txt2img, setting the stage for a deeper exploration of a technology that breaks those bounds. Now that we have seen img2img’s potential through several examples, it’s time to introduce it formally and show its transformative power in the digital creation process.

Recall the challenge of conveying a complex vision through mere words—a task akin to painting in the air. Txt2img helped turn ideas into creations, but it often meant beginning from zero, facing the unpredictability of AI. As they say, a picture is worth a thousand words; we often find our inspiration in images directly.

This is where the prowess of image-to-image (img2img) technology comes to the forefront. Consider it not just a tool, but a collaborative partner that understands the nuances of your vision, enabling you to refine, enhance, and transform images with precision. Img2img acts as an intuitive extension of your creative process, capturing your edits and ideas and manifesting them directly on the digital canvas. It represents a leap forward, offering a seamless melding of your imagination with AI’s capabilities, and empowers you to experiment rapidly and cost-effectively, thereby overcoming the limitations faced with txt2img.

3.2 How Img2img Works

Img2img takes an input image and a text prompt as its inputs, and the generated image is conditioned on both. In the diffusion process, the only difference from txt2img is the starting point: the initial latent image is the input image with some noise added, rather than pure noise. Setting the denoising strength to the maximum is equivalent to text-to-image because the initial latent image is then entirely random. Turning the denoising strength down lets the initial latent image retain the statistical characteristics of the input image, so the output image resembles the input.
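The role of denoising strength can be sketched with a toy example. Everything here is a simplifying assumption for illustration: the latent is a short list of floats, `noisy_start` uses a simple variance-preserving blend rather than a real noise schedule, and `steps_to_run` follows a convention used by some open-source implementations in which strength also scales how many denoising steps are executed.

```python
import math
import random


def noisy_start(latent, strength, seed=0):
    """Mix an input latent with Gaussian noise according to `strength`.

    Toy variance-preserving blend (an assumption for illustration):
    strength 0.0 returns the input unchanged, strength 1.0 returns pure noise.
    """
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be between 0 and 1")
    rng = random.Random(seed)
    keep = math.sqrt(1.0 - strength)
    add = math.sqrt(strength)
    return [keep * x + add * rng.gauss(0.0, 1.0) for x in latent]


def steps_to_run(total_steps, strength):
    """Number of denoising steps executed for a given strength (assumed convention)."""
    return min(int(total_steps * strength), total_steps)


image_latent = [0.5, -1.0, 2.0]
unchanged = noisy_start(image_latent, strength=0.0)
pure_noise_a = noisy_start(image_latent, strength=1.0)
pure_noise_b = noisy_start([9.0, 9.0, 9.0], strength=1.0)
print(unchanged, pure_noise_a == pure_noise_b, steps_to_run(50, 0.5))
```

At maximum strength the starting latent no longer depends on the input image at all, which is why that setting behaves like txt2img; intermediate values keep part of the input and run fewer denoising steps.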

Another important set of tools for img2img is control models such as ControlNet [6]. These models are neural network structures that attach to large pretrained image diffusion models, like Stable Diffusion, so that the diffusion model can learn task-specific input conditions. They make it possible to govern elements like pose, texture, and shape in generated images much more accurately. In short, thanks to ControlNet and its equivalents, users can define which specific characteristics of the input image they want to preserve in the output generations.
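Control models are typically fed a map derived from the input image, such as an edge map, a depth map, or a pose skeleton, so that the chosen property is preserved while everything else is free to change. As a rough illustration of what such a conditioning map carries, the sketch below derives a crude edge map from a grayscale grid. The function `edge_map` and its threshold are illustrative assumptions; real pipelines use proper detectors (Canny edges, for example) together with a trained control network.

```python
def edge_map(gray, threshold=50):
    """Mark pixels whose right or lower neighbour differs by more than `threshold`."""
    height, width = len(gray), len(gray[0])
    edges = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            right = abs(gray[y][x] - gray[y][x + 1]) if x + 1 < width else 0
            down = abs(gray[y][x] - gray[y + 1][x]) if y + 1 < height else 0
            if max(right, down) > threshold:
                edges[y][x] = 1
    return edges


# A dark left half (0) next to a bright right half (255).
gray = [[0, 0, 255, 255],
        [0, 0, 255, 255],
        [0, 0, 255, 255]]
control = edge_map(gray)
print(control)
```

The resulting map keeps the boundary between the two regions and discards their brightness, which is the sense in which a user chooses what to preserve: shape goes in as a constraint, while color and texture are left to the prompt.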

3.3 How to Use Img2img

3.3.1 Upload or Generate an Image

Upload the image you wish to transform, or generate a new one directly in an AI image generator. The original image serves as the foundation for the img2img process: you can adjust its style, fine-tune its details, or give it a new ambiance.

3.3.2 Choose a Style and Write Prompts

A powerful feature of img2img is the AI photo filter, which lets you change your photo’s style in just one click. While the image itself serves as a powerful prompt containing most of the information, you can still add text prompts to describe the style and colors of the scene more precisely.

For instance, when converting a sketch to a finished image, you can choose a realistic style and add descriptions of the colors to achieve the image you like. Additionally, you can adjust the degree of structure match for the image; lowering it will yield a more creative outcome.

3.3.3 Generate and Fine-Tune

Click to generate, select your desired outputs, and you can then proceed to make local, detailed img2img adjustments on the generated image while keeping the rest of the structure unchanged.

3.4 Img2img in Action: Shining Where Txt2img Falters

Img2img technology represents a leap forward in visual design, overcoming the challenges faced by text-to-image (txt2img) methods. Unlike txt2img, which can struggle to capture complex visual details through text descriptions, img2img works directly with images. This approach allows for a more intuitive and flexible design process.

Whether it’s refining the identity of a brand, envisioning the latest fashion line, reimagining an interior space, or crafting compelling visual narratives for posters, img2img stands as a powerful tool that transforms imagination into tangible reality with unmatched precision and creativity.

| Design Area | Challenge with Txt2img | How Img2img Solves It |
| --- | --- | --- |
| Logo Design | Struggles with capturing abstract shapes and precise lines. | Starts with existing logos, shapes or sketches for iterative refinement. |
| Fashion Design | Limited by textual descriptions for creative outfits. | Transforms model images with added clothing into realistic outfits. Turns sketches into refined visuals. |
| Interior Design | Hard to convey spatial arrangements and texture details. | Converts basic layouts to detailed interiors, easily adjusting furnishings. |
| Poster Design | Difficulty in capturing a poster’s detailed theme accurately. | Transforms images into custom posters, tweaking styles and elements. |

3.4.1 Logo Design

In the bustling marketplace of brands, a logo serves as the cornerstone of a company’s visual identity, encapsulating its essence in a glance. Crafting a logo that resonates and remains etched in memory is paramount.

While the text-to-image (txt2img) approach offers a broad canvas for creativity, it often stumbles when faced with the intricate task of logo design. Describing abstract shapes, precise lines, and geometric forms in words is not only cumbersome but also limits the depth of creative exploration.

In contrast, img2img transformations bypass the limitations of textual descriptions, offering the fluidity of visual manipulation. Starting with an existing shape or a rough sketch, designers have the freedom to iterate, modify, and refine with ease.

Check out the examples below, where every outcome was transformed from an original but lackluster logo picture by applying various visual effects with img2img.

In the first example, we uploaded an image of Dzine’s logo. We aimed to keep the logo’s “S” shape while adding a sense of design and freshness. By using img2img, raising the structure match setting, and specifying a desert texture in the prompt, we achieved the stunning results seen in the last two images.

For text-based elements like the “Dzine” title, img2img technology is equally effective. We don’t have to worry about issues that might arise with txt2img technology, such as whether the correct text can be generated or if the style and font match our desired look. Simply by importing the title image, selecting a style, and adding a prompt description if necessary, we can transform it into stunning effects like flames or glaciers with rich detail.

Additionally, img2img technology excels even with logos that are more doodle or line-heavy. Take this bird logo as an example: img2img not only enhanced its three-dimensional effect and theme colors but also added a charming bird in just the right spot, perfectly aligning with the logo’s concept. We can see that using img2img for logo design makes it easier to find inspiration and design logos that are lively and interesting.

In summary, these examples demonstrate that beginning with a concrete shape or a simple draft facilitates a smooth evolution into a polished logo, encapsulating the essence and values of the brand. Seen from this perspective, img2img emerges as a powerful tool in a designer’s toolkit, supporting a design process that is both efficient and exploratory.

3.4.2 Fashion Design

In the realm of fashion design, the advent of img2img technology offers an innovative avenue for creativity and presentation.

In terms of creativity, img2img offers designers a seamless workflow for uncovering inspiration. We’ve seen that the traditional method of generating outfit ideas often involves experimenting with different clothing combinations or searching through magazines and websites for inspiration. With img2img, designers can incorporate runway images, magazine photos, and mood board elements directly onto their workspace. Supported by powerful image editing tools and a layer system, they can effortlessly collage and experiment with various styles, such as futuristic or retro looks. Additionally, features like variation allow for the exploration of more variants and derivatives of a single image with just one click.

When it comes to presentation, img2img possesses unique advantages. In traditional workflows, even with a clear design concept, bringing it to life as a visualized fashion design requires sketching, rendering and even physical fitting. What’s more, the trial-and-error method involved in physical fittings can be burdensome and might not be accessible to everyone, owing to considerations like expense, size availability, or the availability of a broad assortment of items. However, img2img offers flexible options: you can combine your favorite clothing elements on a model’s base image and use img2img to transform it into a realistic model picture; or you can start with a sketch and convert your creative ideas into polished renderings.

Let’s discuss the first option with the following example: As shown in the image, I’ve combined my favorite clothing elements on a model’s base image. This step is not much different from what you’d do with traditional image editors, but what I aim to achieve goes beyond merely overlaying images.

Through img2img, I directly transform this collage into a coherent and complete model outfit visualization. As seen in the right image, img2img perfectly retains the clothing’s color, pattern, and texture, making it shine on the chosen model. Furthermore, you can continue to collage images on this effect picture, make detailed adjustments with generative fill, and explore more fashion ideas through variations.

Now, let’s explore the latter option, starting from a sketch. Previously, we experimented with sketch-to-image for industrial designs like cars and perfumes. Now, let’s try it with fashion design. As shown below, I start with a sketch of a dark green velvet evening gown. Using img2img, I transform it into the realistic photo on the right. You can see that the design of the dress is well preserved, making it suitable even for use as a product image.

The img2img approach in fashion design significantly enhances the process of creating and visualizing outfits. By digitally merging selected clothing items onto a model’s base image, it opens up endless possibilities for styling and promotes sustainable fashion by reducing the need for physical trials. This method is faster and more cost-effective, democratizing fashion design, making it accessible to anyone regardless of their resources or technical skills.

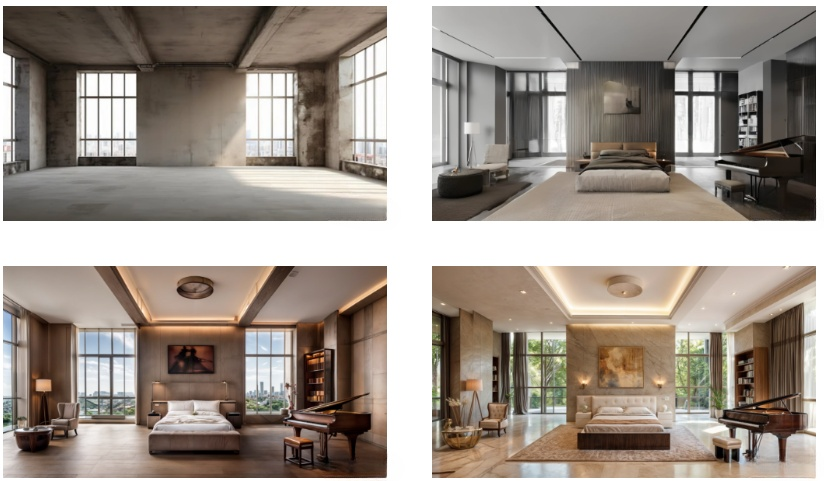

3.4.3 Interior Design

Img2img technology is transforming the field of interior design, offering a precision and flexibility that text-to-image processes can’t match. In interior design, where every detail matters, from the texture of the curtains to the color of the walls, img2img stands out as the ideal tool. Starting with a basic layout, it allows designers to adjust and fine-tune the shapes and positions of furniture and decor with ease, transforming initial sketches into stunning visual representations of interior spaces.

Take a look at the following example. I uploaded the original image, an undecorated, bare room. First, I used a combination of collaging furniture images and generative fill to place the furniture I wanted inside: a spacious double bed, armchairs, a small table, a floor lamp, a bookshelf, and a piano. Next, it was time to experiment with my preferred decorating styles. The first attempt was an industrial style, which I found a bit too monotonous and lacking the feeling of “home.” So, out of curiosity, I tried a commercial hotel style next, and it indeed looked like a well-decorated hotel. On my third attempt, I tried a modern glamorous style, and this time the room’s style and layout looked very harmonious, making for a very elegant bedroom. Now, I can take this image to discuss with a professional interior designer. The whole process required no special skills; just a few clicks of the mouse gave me a completely different interior design.

This capability is invaluable not just for personal experimentation but also for professional collaboration. Designers can effortlessly share their visions with clients, making it easy to illustrate potential changes or alternatives. It offers a dynamic platform for exploring different styles, facilitating a more interactive design process. With img2img, concepts and drafts evolve into fully realized designs swiftly, bridging the gap between imagination and the tangible reality of a space.

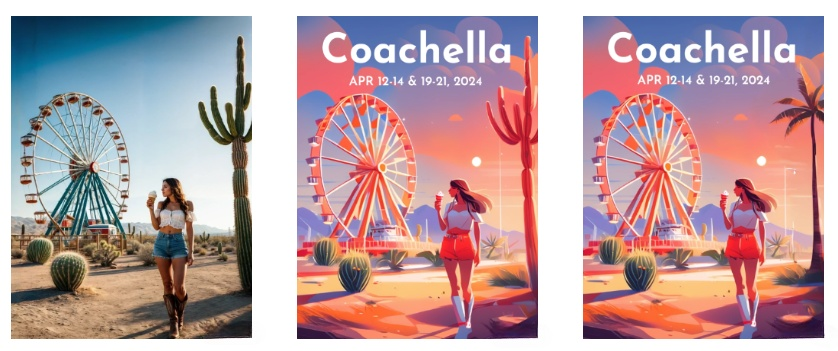

3.4.4 Poster Design

In the world of poster design, img2img technology is changing how creative people turn their ideas into reality. This technology offers a smooth way to move from a starting image to a finished, attention-grabbing poster, unlike the txt2img method, which might not fully capture a poster’s detailed theme.

Let’s look at using img2img for designing a poster. The Coachella music festival is held from April 12 to 14 and from April 19 to 21 this year. Let’s say we’re making a poster for Coachella. First, we’d gather inspiration online, looking at related materials and designs to form our plan. If we were using txt2img, we’d have to describe our idea from scratch, hoping we write a clear, comprehensive prompt that AI understands to get the image we want. This process can be challenging and unpredictable.

With img2img, we start from an image we’ve already found that has the aspects we like and transform it into an artistic, unique poster. In our example, I loved an initial realistic image because it captured Coachella’s iconic elements: the Ferris wheel, mountainous terrain, bushes, and a stylishly dressed girl. Using img2img, I transformed it into a vibrant, dreamlike poster. However, the cactus on the right side of the finished image looked odd and didn’t fit Coachella’s vibe, so I used generative fill to replace it with palm trees, which looked more natural.

This way, I efficiently created a simple yet beautiful poster, ready to be modified and produced in batches if needed. Using img2img for poster design combines efficiency with broad creative potential. The approach simplifies the design process, allowing for rapid iterations and the exploration of different aesthetics without starting anew for each variation. By directly manipulating and refining images, designers can steer the final output toward their vision, enhancing both productivity and artistic freedom.

What’s more, img2img fosters a collaborative environment, where designers can share progress with clients or team members, incorporate feedback in real-time, and iterate towards a poster that exceeds expectations in conveying the intended message and emotional resonance. This technology not only shortens project turnaround times, crucial for meeting tight deadlines, but also opens up new avenues for creativity. Designers are empowered to transcend traditional boundaries, experiment with innovative visual solutions, and captivate audiences with their work.

4. Dzine: At the Vanguard of Img2img Innovation

4.1 Our Img2Img Engine

Recognizing the importance of img2img, we have dedicated ourselves to building a leading img2img engine. Our img2img engine is designed to ensure that the structure and content of images are accurately preserved and enhanced.

4.2 Elevating Visuals with Cutting-Edge Img2img Features

Dzine’s img2img engine is a leap forward in digital creativity, making it simpler and more intuitive for everyone to transform images. Whether you’re drawn to the detailed textures of classical artwork or the striking lines of contemporary design, Dzine simplifies the process of altering an image’s mood and tone. Beyond mere editing, it empowers you with the tools to freely explore and refine your artistic ideas, offering a blend of precision and creative liberty in every design.

| Features | Description |
| --- | --- |
| AI Filters | Apply styles to images, going beyond surface changes to preserve original image data. |
| Combine Images with AI | Merges and styles images for fashion and design, harmonizing mismatched elements. |
| Local Editing Tools | Includes generative fill, remove, and select for precise image manipulation. |
| Background Removal and Change | Seamlessly removes and changes backgrounds, integrating subjects into new environments. |
| Enhancement and Upscaling | Improves image clarity and details, preserving the essence while correcting flaws. |
| Image Variations | Generates multiple versions of an image, supporting varied design iterations. |

4.2.1 AI Filters for Comprehensive Style Transformations

Dzine’s AI filters, the epitome of Dzine’s img2img technology, allow users to apply a wide range of styles to their images. The term “filter” recalls the filter function in traditional image editors. However, AI filters go beyond surface-level alterations: they preserve the original image’s data characteristics during the diffusion process and present them in a different artistic style. So, what specifically can Dzine’s AI filters achieve?

In the sections above, we discussed img2img’s role through practical application scenarios like fashion design and interior design. Here, let’s summarize img2img’s use cases by workflow:

4.2.1.1 From flat doodles to completed designs: Realize your ideas quickly

As discussed previously, designers can swiftly transform sketches into refined designs. However, even if you lack a designer’s advanced drawing skills and have only simple doodles, Dzine can still generate exquisite images for you. Dzine’s TikTok account showcases similar examples, where you can see its effectiveness. In this workflow, Dzine primarily simplifies design steps and rapidly brings your creative ideas to life.

4.2.1.2 From ordinary to artistic: Spark creativity and fit for various purposes

Another design process involves transforming realistic, complete pictures into more artistic ones suited to various application scenarios. Often, our inspirations truly come alive through images, and starting with an existing picture saves heaps of effort. It’s like having a shortcut to your imagination. Dzine boasts a rich and ever-expanding style library, allowing you to experiment with your ideas swiftly and cost-effectively. In this fast and intuitive generation process, inspiration is easy to come by: you can quickly create stylistically diverse derivative images while maintaining the original image’s structure and elements.
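To make the idea of "restyling while keeping structure" more concrete, here is a toy sketch of the strength setting that open-source img2img pipelines commonly expose. The article does not describe Dzine’s internals, so everything here is an assumption for illustration: the function names `steps_to_run` and `add_noise` are hypothetical, and the noising formula is a simplified stand-in for a real diffusion schedule.

```python
import random


def steps_to_run(num_steps: int, strength: float) -> int:
    """How many of the scheduled denoising steps run for a given strength."""
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be between 0 and 1")
    return min(int(num_steps * strength), num_steps)


def add_noise(pixels, strength, seed=0):
    """Toy noising step: mix source pixels with Gaussian noise.

    strength=0 returns the source unchanged; strength=1 returns pure noise.
    This is an illustrative assumption, not Dzine's actual method.
    """
    rng = random.Random(seed)
    keep = (1.0 - strength) ** 0.5   # weight on the source image
    noise = strength ** 0.5          # weight on the random noise
    return [keep * p + noise * rng.gauss(0.0, 1.0) for p in pixels]


source = [0.2, 0.8, 0.5]
light_restyle = add_noise(source, strength=0.25)  # stays close to the source
heavy_restyle = add_noise(source, strength=0.9)   # mostly noise, little source left
```

In this picture, a low strength leaves most of the source image in place before the model restyles it, which is one plausible reason an img2img result can keep the original composition while changing its look.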

Here are more specific use cases:

- 2D-to-3D Conversion

Dzine simplifies the transformation of 2D character images into 3D effects with just a few clicks. This is particularly exciting for fans and creators of anime around the world. It’s more than just an interesting tech trick; it has the potential to revolutionize how anime merchandise is created.

Making figurines, especially the step where you create a prototype, is known to be tough. The goal is to make a 3D figure that still looks like its 2D version, which often requires lots of back-and-forth, making the process slow and expensive.

Dzine changes the game here. Thanks to its advanced technology, it can make accurate 3D versions of anime characters and even lets users tweak details like clothes and props right on the image. This makes the whole process much smoother and faster.

To put it more specifically, consider the production and sales process. For manufacturers of collectible figures, it’s crucial to determine whether a figure’s design and attire will appeal to their audience. Imagine a technology that could quickly generate preview images of figures from 2D characters, allowing manufacturers to survey preferences or run pre-order and pre-sale events. This would greatly benefit figure retailers by enabling demand-driven production and boosting sales.

Take Goku, a favorite anime character among many fans, as an example. I used Dzine’s AI filter in a realistic style to transform the 2D, line-drawn Goku into a lifelike, detailed 3D version that closely resembles an actual figure. I also added a cool halo effect behind Goku, further enhancing the figure’s appeal.

If you’re interested in seeing how this works in more detail, I recommend checking out a video by PiXimperfect and Dzine’s blog. Both give a clear step-by-step guide on how to use this feature, showing how Dzine is making it easier for everyone to bring their creative ideas to life.

Check Dzine’s blog here:

How to Give 2D Graphics a 3D Makeover in Seconds

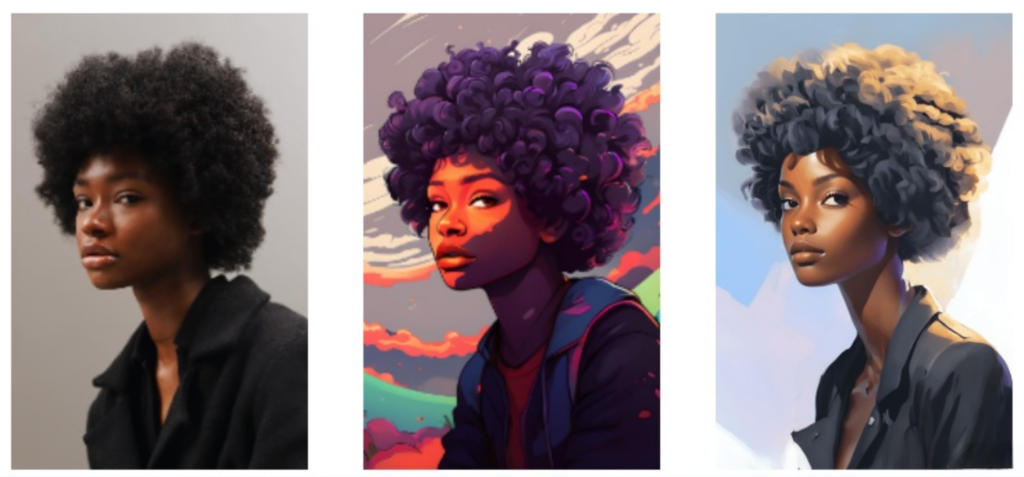

- Avatar Creation

Dzine’s img2img feature stands out for its ability to create personalized avatars with ease. Unlike txt2img, which begins with a blank slate requiring detailed descriptions, img2img starts with an existing photo, making the process more intuitive and the outcomes more accurately reflective of real-life features. Within Dzine, you have the liberty to experiment with a range of styles, from realistic portraits and playful cartoons to gentle watercolors, offering a broad canvas for your imagination.

The example below showcases how easily you can experiment and find your preferred avatar style with just a few clicks. Even the man in the first picture isn’t real; he was generated in a realistic style from an anime image. Which style captures your imagination?

- Illustration and Comic Book Creation

Leveraging Dzine’s AI filters opens up a world of creativity, allowing you to transform scenes from movies or TV shows into compelling comic book panels or whimsical illustrations, perfect for crafting your own comic books or children’s picture books. This innovative approach enables fans and creators to reinterpret cinematic storytelling through visual art, providing a unique medium to relive and share beloved narratives.

The process is intuitive and accessible, inviting artists of all levels to explore their creativity without the steep learning curve associated with traditional art software. With a variety of filters at your disposal, you can play with different artistic styles, from the exaggerated expressions and bold colors characteristic of comics to the delicate and dreamy aesthetics of children’s book illustrations. This versatility encourages experimentation, allowing stories to be told in innovative and engaging ways, and opens up new possibilities for narrative expression.

Dzine’s technology meticulously considers every detail from the original scenes, ensuring that the essence of characters, the depth of their emotions, and the complexity of their settings are translated into visually stunning art. Moreover, Dzine’s capacity to turn film and TV scenes into art makes it an exceptional tool for generating unique content that stands out.

4.2.2 Combine Images with AI

In the examples above, covering fashion and interior design, we utilized Dzine’s capability to combine and style images. This feature allows you to place your favorite images or elements from those images onto a canvas in just the right spot. Up to this point, it resembles traditional collage making, but Dzine takes it a step further by enabling an overall img2img transformation. Even if your collage has mismatched styles or lighting, Dzine’s AI filter can harmonize these elements by converting their styles to make them blend seamlessly together.
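The collage step described above can be sketched in a few lines. This is only an illustration under stated assumptions: images are tiny grids of numbers, `None` marks a transparent pixel, and the `paste` helper is a hypothetical name rather than part of Dzine. The composited canvas would then serve as the input to an img2img pass that harmonizes style and lighting.

```python
def paste(canvas, element, top, left):
    """Return a copy of canvas with element pasted at (top, left).

    Pixels set to None in the element are treated as transparent, and
    anything falling outside the canvas is clipped.
    """
    out = [row[:] for row in canvas]
    for r, row in enumerate(element):
        for c, px in enumerate(row):
            y, x = top + r, left + c
            if px is not None and 0 <= y < len(out) and 0 <= x < len(out[0]):
                out[y][x] = px
    return out


canvas = [[0, 0, 0],
          [0, 0, 0],
          [0, 0, 0]]
jacket = [[1, None],
          [1, 1]]

collage = paste(canvas, jacket, top=1, left=1)
# collage is now the rough composite that an img2img filter would restyle
```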

The combine images function has vast applications in fashion design, useful for both designers and enthusiasts alike. You can merge elements from your mood boards and outfit inspirations onto a base image of a model (which can also be yourself!) and transform them into elegant fashion illustrations.

On a more commercial note, Dzine’s image combination can also be employed to design unique apparel prints. The following video demonstrates a workflow that starts with a few ideas, combines them, and swiftly generates a series of graphics. Similarly, you can leverage this feature to create exquisite illustrations with Dzine.

4.2.3 Local Image Editing with Generative Fill and Remove

Dzine’s img2img engine, at its core, revolutionizes the way we approach image creation and modification. With its suite of local editing tools, including generative fill, remove, and select functions, Dzine enables users to engage in detailed manipulations of images with precision and creativity. These tools open up a plethora of possibilities for users to refine, customize, and enhance their visual projects, making the engine an indispensable asset for both amateur and professional creators alike.

4.2.3.1 Generative Fill

The generative fill tool stands out for its ability to intelligently add to images, whether filling in backgrounds, extending patterns, or creating new elements that blend seamlessly with the original composition. This tool leverages Dzine’s deep understanding of context and aesthetics, ensuring that additions feel natural and coherent. It’s particularly useful for designers looking to complete a scene or add complexity to their visuals without starting from scratch.

Here’s a closer look at how generative fill works and how it’s being implemented:

- Text-Driven Generative Fill

Dzine offers an intuitive way to modify specific image areas: just brush over the area you wish to change and type in what you want. This method makes it easy to add or alter image elements in a way that closely matches your vision, blending them seamlessly into the scene.

- Sketch-Referenced Generative Fill (Planned)

This upcoming feature will let users create new elements based on a sketch. Just provide a reference sketch, and Dzine will craft matching elements, narrowing the gap between your initial ideas and the final image.

- Brushstroke-Based Generative Fill (Planned)

Also under development, this approach will generate elements based on your brushstrokes, interpreting the shape, direction, and colors you’ve applied. This promises a tactile, hands-on way to shape your image, making your adjustments feel more direct and personal.

Whether through text prompts, reference sketches, or direct brushstrokes, Dzine aims to provide a toolkit that adapts to various creative workflows, ensuring that every tweak or addition precisely enriches the artwork, just as the creator envisioned.
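The "brush over an area, then fill it" workflow above can be pictured as a mask plus a blend. The sketch below is an assumption for illustration, not Dzine’s implementation: `brush_mask` and `masked_blend` are hypothetical names, images are small grids of numbers, and the `generated` grid stands in for whatever a generative model would produce from the text prompt.

```python
def brush_mask(height, width, cy, cx, radius):
    """Build a mask with 1.0 inside a circular brush dab and 0.0 elsewhere."""
    return [
        [1.0 if (y - cy) ** 2 + (x - cx) ** 2 <= radius ** 2 else 0.0
         for x in range(width)]
        for y in range(height)
    ]


def masked_blend(original, generated, mask):
    """Take generated pixels where the mask is 1 and original pixels where it is 0."""
    return [
        [g * m + o * (1.0 - m) for o, g, m in zip(o_row, g_row, m_row)]
        for o_row, g_row, m_row in zip(original, generated, mask)
    ]


original = [[0.0] * 3 for _ in range(3)]    # the existing image
generated = [[10.0] * 3 for _ in range(3)]  # placeholder for model output
mask = brush_mask(3, 3, cy=1, cx=1, radius=1)

result = masked_blend(original, generated, mask)
# Only the brushed pixels change; everything outside the mask is untouched.
```

Fractional mask values (for example 0.5 along the brush edge) would blend the two images, which is one simple way to soften the seam between new and existing content.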

4.2.3.2 Generative Remove

On the flip side, the remove tool offers the capability to declutter or alter images by erasing unwanted elements. Whether it’s an intrusive background object or a blemish on a portrait, users can effortlessly clean up their images, maintaining focus on the desired subjects. This tool enhances the compositional quality, making images more striking and focused.

4.2.3.3 Auto-select Tool

The select tool further enhances Dzine’s local editing prowess, allowing users to isolate and modify specific parts of an image. From adjusting colors and textures to reshaping or repositioning elements, this tool gives users the freedom to tweak images to their exact specifications. It’s invaluable for creating dynamic compositions or for when precise adjustments are needed to bring a creative vision to life.

Together, these tools embody the essence of img2img’s capabilities, offering a comprehensive solution for image editing that goes beyond basic adjustments. They allow for the creation of detailed, nuanced compositions that would be difficult to achieve with traditional methods. With Dzine’s img2img engine, users have the power to transform their images into meticulously crafted works of art, pushing the boundaries of what’s possible in digital creativity.

4.2.4 Background Removal and Change

Dzine’s img2img engine transforms the task of removing and changing backgrounds into a seamless, one-click operation. This feature is especially valuable for placing objects against new, imaginative backdrops, dramatically altering the context and enhancing the visual narrative of the original image.

After effortlessly isolating the subject with Dzine’s precise background removal tool, users can dive into a creative journey, choosing from a rich library of backgrounds or crafting their own. The subsequent img2img process then integrates the subject into its new environment with stunning realism. It considers the nuances of lighting, shadow, and perspective to ensure the subject doesn’t just sit on the new background but interacts with it, creating a cohesive and believable scene.

This capability opens up endless possibilities for users to experiment with various scenarios, enabling a quick exploration of how different backgrounds can shift the mood and message of the image. Dzine’s approach to background modification is not merely a technical feat; it’s a gateway to boundless creative expression, allowing even those with minimal editing experience to achieve professional-quality results with ease.

4.2.5 Enhancement and Upscaling

Dzine’s Enhance and Upscaling features rejuvenate images by improving clarity, enriching details, correcting colors, and eliminating flaws, thereby preserving the image’s unique essence. Unlike text-to-image methods that may falter with intricate enhancements, Dzine ensures that every pixel contributes to a visually appealing outcome. This capability is crucial for professionals and hobbyists alike, seeking to breathe new life into their visuals without compromising their originality.

4.2.6 Image Variations

Dzine’s Image Variation, powered by img2img technology, optimizes the creative workflow by effortlessly generating multiple versions of an original image. This feature is invaluable for projects that demand a variety of design iterations, such as evolving brand logos or innovative product concepts. It allows designers to swiftly explore different aesthetics, facilitating a dynamic and efficient design process. This capability is especially crucial when fine-tuning visual identities or presenting multiple options to clients, ensuring a broad spectrum of ideas can be visualized and assessed without the need for time-consuming manual adjustments. With Dzine’s Image Variation, the pathway from concept to completion becomes smoother, enabling a richer exploration of creative possibilities.

5. A Canvas Without Limits: Envisioning the Future

Navigating through AI-driven design, we’ve embarked on a journey from txt2img technology to the more direct image manipulation offered by img2img. This progression highlights a pivotal evolution in how we bring our visions to life, underscoring the transformative power and potential of img2img in creating visuals that resonate more closely with our original intent.

Img2img technology, by allowing direct refinements and alterations on images, gets past many tricky parts of txt2img. It offers a seamless blend of creativity and precision, facilitating an iterative design process that is both dynamic and intuitive.

Here at Dzine, we’re not just about making new tech; we want to help spark everyone’s creativity with AI. We are committed to making advanced design tools universally accessible, ensuring that everyone, from professionals to hobbyists, can realize their most ambitious creative visions. The img2img function stands as a testament to this commitment.

As Dzine evolves, we warmly invite the creative community to delve into the extensive possibilities our img2img engine offers. Join us, share your insights, and help shape Dzine’s future. Stay connected by signing up for updates, and engage with us and our community on platforms like X, Instagram, Reddit, YouTube, and our Discord channel, where you’ll find a hub of creativity and collaboration. Together, let’s push the boundaries of img2img technology, transforming the way we create and share art in this digital era.

References

[1] Saharia et al. Photorealistic Text-to-Image Diffusion Models with Deep Language Understanding. arXiv:2205.11487.

[2] Ramesh et al. Hierarchical Text-Conditional Image Generation with CLIP Latents. arXiv:2204.06125.

[3] Rombach et al. High-Resolution Image Synthesis with Latent Diffusion Models. arXiv:2112.10752.

[4] Oppenlaender. The Creativity of Text-to-Image Generation. arXiv:2206.02904.

[5] Mehrabi et al. Resolving Ambiguities in Text-to-Image Generative Models. arXiv:2211.12503.

[6] Zhang et al. Adding Conditional Control to Text-to-Image Diffusion Models. arXiv:2302.05543.